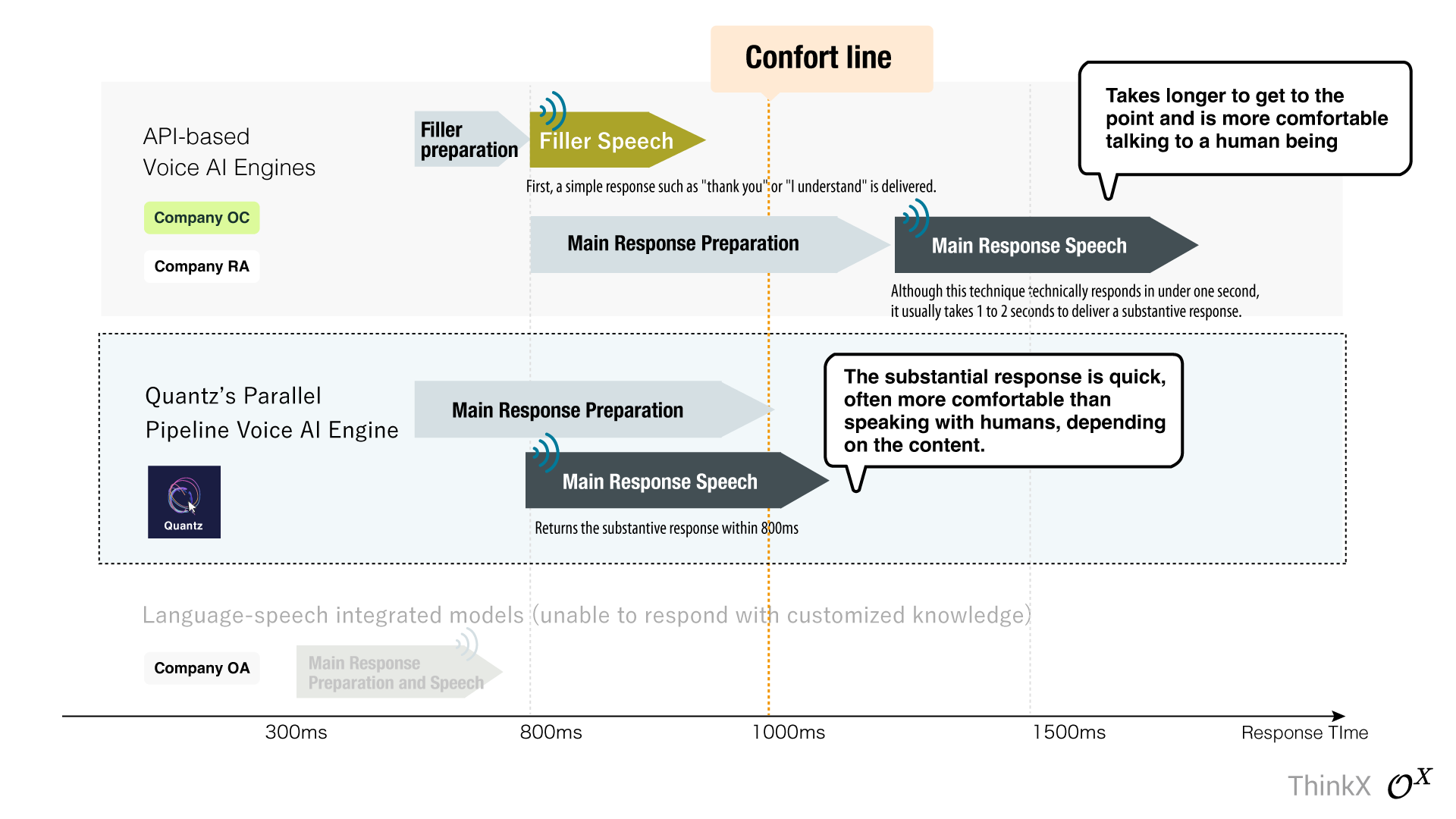

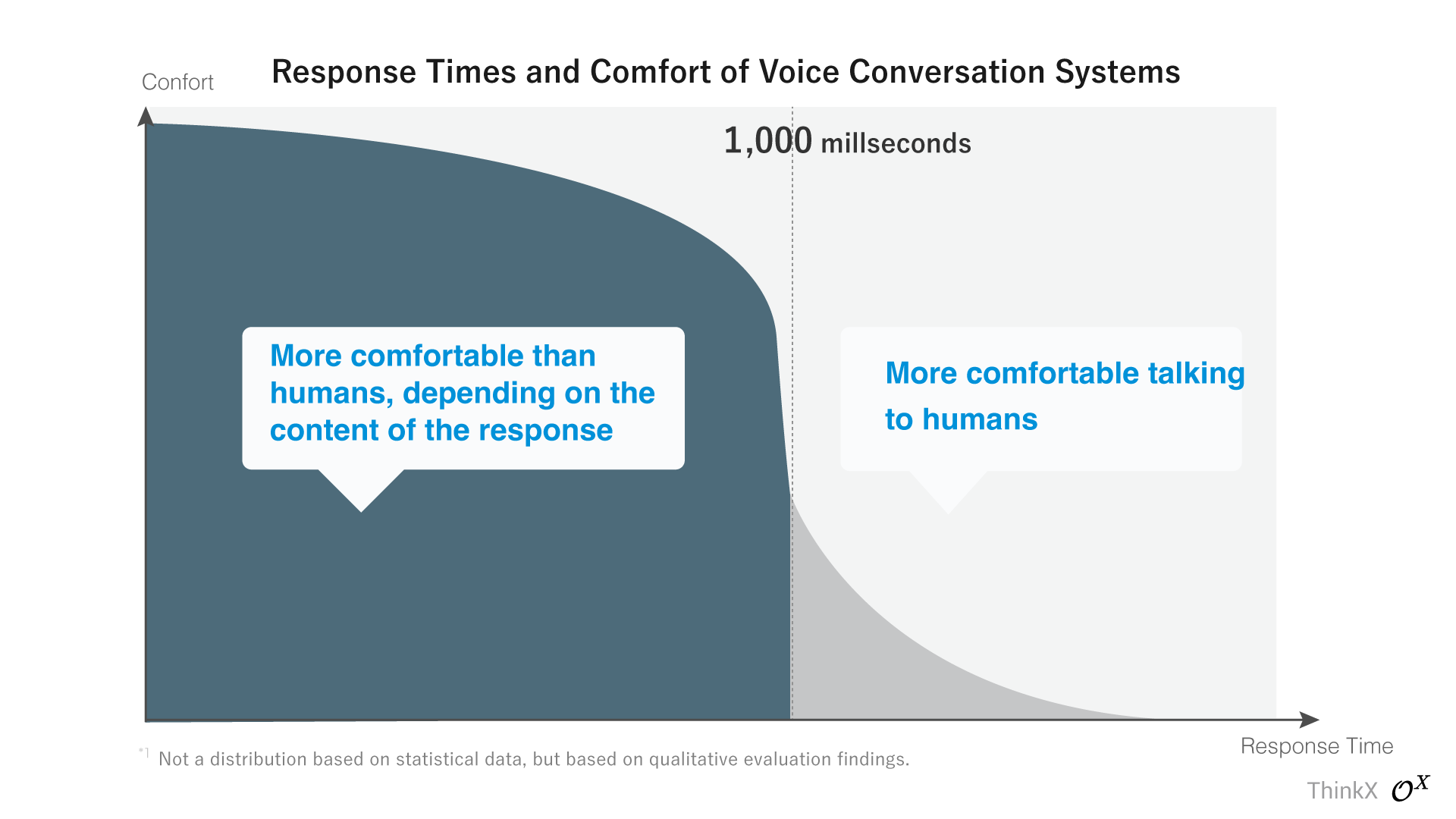

Quantz ® Voice-AI OS delivers the substantive response immediately, with a response time of just 800ms, and without the common 'filler technique' of stalling with simple responses like 'thank you'.

Many typical API-based voice AI engines struggle to exceed the 'one-second barrier' and resort to using the 'filler technique'. This involves issuing a preliminary response such as 'thank you' or 'I understand' while the main response is being prepared. Although this approach may achieve a numerical response time of less than one second, it takes 1 to 2 seconds before the main topic is addressed, which can be frustrating during longer interactions.
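The gap between the measured response time and the wait the listener actually feels can be shown with a small sketch. This is only an illustration: the `Turn` type, the `perceived_wait_ms` helper, and the 700 ms and 1500 ms figures are hypothetical values chosen to fall inside the ranges described above. Only the 800ms figure comes from this page.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    """Timing of one spoken reply, in milliseconds after the user stops talking."""
    first_audio_ms: int  # when any audio starts (a filler counts here)
    main_topic_ms: int   # when the substantive answer starts


def perceived_wait_ms(turn: Turn) -> int:
    # The listener is waiting for the main topic, not for the filler.
    return turn.main_topic_ms


# Hypothetical filler-based engine: speaks "thank you" early, answers later.
filler = Turn(first_audio_ms=700, main_topic_ms=1500)

# Direct response: the first audio already is the main topic (800 ms, per this page).
direct = Turn(first_audio_ms=800, main_topic_ms=800)

print(filler.first_audio_ms < 1000)   # True: looks like a sub-second response on paper
print(perceived_wait_ms(filler))      # 1500
print(perceived_wait_ms(direct))      # 800
```

In this sketch the filler-based engine reports the better headline number (700 ms) yet keeps the listener waiting almost twice as long for the actual answer.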

Quantz ® Voice-AI OS, the world's first LLM-based voice AI engine, eschews this technique, delivering the main topic—the real response—within 800ms.

Breaks the One-Second Barrier with a Real Response Time of 800ms

Quantz ® Voice-AI OS is designed for 'true comfort' and is based on a proprietary codebase of 670,000 lines, including 4 technologies that are patented or patent-pending (2 of the 4 are pending).

It runs the NLP (natural language processing) and speech processing pipelines with massive parallelism, and uses a proprietary byte transfer protocol that reduces the overhead of TCP/IP communication so that large data transfers can be completed on a single server.

The system is powered by dedicated GPU supercomputers managed by ThinkX, ensuring robust performance in a highly secure facility.

Our GPU Cluster Supercom3

Voice-AI OS Optimized for 'True Comfort', Running on a High-Performance Dedicated GPU Supercomputer with a Proprietary Codebase of 670,000 Lines

Quantz ® Voice-AI OS is built on physical servers in data centres with high security requirements and does not use any external APIs such as the ChatGPT API or the ElevenLabs API. Instead, models fine-tuned from the open-source LLM Meta Llama 3 run on a proprietary implementation of the LLM Parallel Inference Adapter, with 100 or more parallel inference streams per GPU (2,500 or more tokens/sec).
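To put the inference figures in perspective, here is a rough back-of-the-envelope calculation. It rests on two assumptions that this page does not state: that the 2,500 tokens/sec figure is the aggregate throughput of one GPU, and that this throughput is shared evenly across 100 parallel streams. The function names are hypothetical, and both figures are quoted as lower bounds, so real per-stream rates may differ.

```python
def per_stream_rate(aggregate_tokens_per_sec: float, parallel_streams: int) -> float:
    """Tokens/sec available to one stream, ASSUMING an even split across streams."""
    if parallel_streams <= 0:
        raise ValueError("parallel_streams must be positive")
    return aggregate_tokens_per_sec / parallel_streams


def generation_time_ms(n_tokens: int, tokens_per_sec: float) -> float:
    """Time to generate n_tokens at a steady rate, in milliseconds."""
    return 1000.0 * n_tokens / tokens_per_sec


# Figures from the page, read as per-GPU lower bounds (an assumption).
rate = per_stream_rate(2500, 100)
print(rate)                          # 25.0 tokens/sec per stream
print(generation_time_ms(10, rate))  # 400.0 ms for a 10-token opening phrase
```

Under these assumptions each conversation receives about 25 tokens/sec, so the first ten tokens of a reply would take roughly 400 ms to generate. This is only an estimate of one stage and says nothing about how the overall 800ms response time is composed.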

This ensures the highest level of privacy protection and security for system users, because critical conversational information is never exposed to external companies.

Highest Level of Data Security and Privacy—No External APIs, No Outgoing Conversational Data

Unlike systems that use a single integrated speech-language model, our modular approach to speech and language models allows for flexible customization of the pipeline. This flexibility includes capabilities such as mid-response monitoring and switching between utterances, all while maintaining scalability and high performance.
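One way to picture mid-response monitoring in a modular pipeline is sketched below. This is a generic illustration and not the Quantz implementation: `speak_with_monitor`, the `Monitor` type, and the fallback utterance are all hypothetical. The idea is that because the language model's output passes through a separate stage before speech synthesis, each chunk can be inspected and the reply switched to a different utterance partway through.

```python
from typing import Callable, Iterable, Iterator

# Hypothetical monitor: returns True if a text chunk is acceptable to speak.
Monitor = Callable[[str], bool]


def speak_with_monitor(
    chunks: Iterable[str], is_ok: Monitor, fallback: str
) -> Iterator[str]:
    """Pass language-model chunks on to the speech stage one at a time.

    If the monitor rejects a chunk, stop the current reply and switch
    to the fallback utterance instead.
    """
    for chunk in chunks:
        if not is_ok(chunk):
            yield fallback
            return
        yield chunk


# Toy usage: reject any chunk containing a blocked word.
reply = ["Your balance is", "SECRET-TOKEN", "as of today."]
spoken = list(
    speak_with_monitor(reply, lambda c: "SECRET" not in c, "Let me rephrase that.")
)
print(spoken)  # ['Your balance is', 'Let me rephrase that.']
```

With a single integrated speech-language model there is no text stage at which to place such a check, which is the kind of flexibility the modular design is meant to provide.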

Modularized Language and Speech Models: Scalable and Customizable Pipeline

Quantz ® Voice-AI OS operates on dedicated physical servers, ensuring unparalleled data security by eliminating reliance on external APIs. This setup guarantees that no conversational data is transmitted externally, upholding the strictest privacy standards.

"Where Answers Arrive at the Speed of Thought": Quantz delivers on this promise through the intelligence of a state-of-the-art LLM. Speak less and solve more with Quantz; it understands even the most concise queries and provides precise, to-the-point answers. It also handles complex, long sentences of over 30 words and still responds within 800ms.

Quantz ® Voice-AI OS strikes the optimum balance between customisation flexibility, stringent privacy standards and 'true comfort', providing fast and real responses to enhance user experience.
